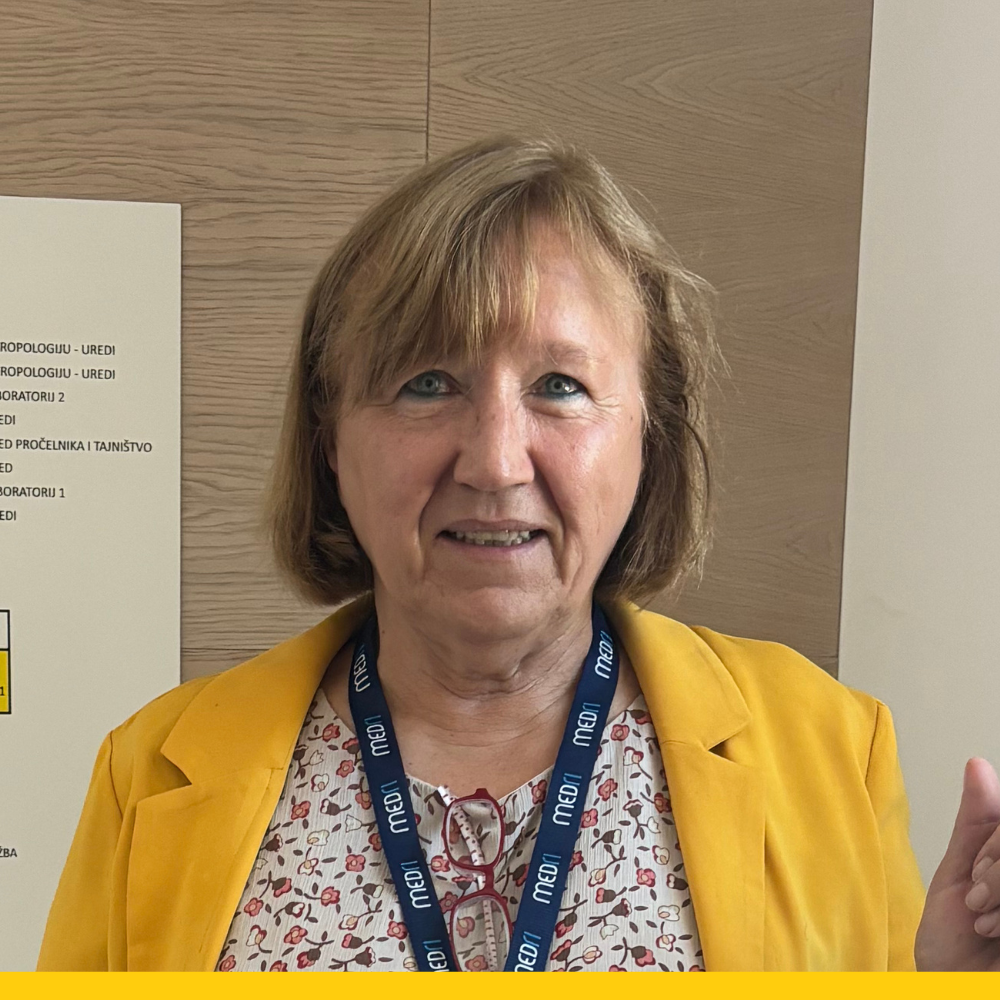

Jadranka Stojanovski is a retired Associate Professor who previously taught at the University of Zadar, Department of Information Sciences. She has more than 20 years of professional experience in designing open access and open science infrastructure and services that organize knowledge created by the Croatian research community. Previously, she worked as the head of the library at the Ruđer Bošković Institute. Jadranka has an interdisciplinary background, with an MSc in Physics and an MA and PhD in Information Sciences earned at the University of Zagreb.

Jadranka’s research interests are in the field of open scholarly communication, more specifically in peer review and research assessment, new trends in scholarly publishing, ethical issues, and research data. She is also involved in continuing education and has delivered numerous lectures and workshops for researchers, journal editors, and librarians.

Beyond Proxies: Re-Evaluating Quality in Scholarly Communication for Meaningful Research Assessment

Quality in scholarly communication is a multifaceted and contested concept. For decades, the academic community has relied heavily on a limited set of quantitative indicators—publication counts, citation metrics, journal rankings, or publisher prestige—as shorthand measures of quality. These indicators have undoubtedly shaped academic cultures, career progression, and funding decisions, but their use as proxies for quality is problematic. They capture only a narrow dimension of scholarly activity, often distort incentives, and can inadvertently reward quantity over substance. Reliance on such indicators has also contributed to practices such as “publish or perish”, citation gaming, and overemphasis on journal brands rather than the content of research itself.

A more comprehensive understanding recognises that quality is embedded in practices, infrastructures, and cultures. It is not a static label applied at the point of publication, but a dynamic property that spans the entire research lifecycle. Quality must therefore be understood both as a process—how research is designed, conducted, reported, disseminated, and preserved—and as an outcome, in terms of credibility, reliability, and societal relevance.

In this presentation, I will discuss a six-dimensional framework of quality that seeks to make this complexity more visible.

- Research quality encompasses rigour, reproducibility, originality, and ethical conduct. These features are fundamental to trustworthy knowledge creation and include robust methodological design, appropriate analysis, and adherence to established ethical standards.

- Reporting quality highlights the clarity, transparency, and completeness with which research is described. Adherence to reporting guidelines, transparent disclosure of limitations, and correct referencing are essential for enabling others to evaluate and reuse results.

- Publication and editorial quality refers to the integrity of the publishing process: fair and transparent peer review, strong editorial oversight, and reliable production processes including metadata accuracy and correction mechanisms. It also includes openness in licensing and the use of persistent identifiers.

- Communication quality concerns accessibility and dissemination. Research should be written clearly, made available in multiple languages where possible, and presented in ways that engage broader audiences, including policymakers, practitioners, and the public.

- Infrastructure and process quality acknowledges the technical and organisational environments that underpin scholarship. This involves FAIR-compliant data practices, sustainable repositories, transparent governance, and inclusive community ownership of infrastructures.

- Cultural and ethical quality captures the values and norms shaping research: integrity, equity, diversity, inclusiveness, and the safeguarding of academic freedom.

Peer review remains a central pillar of scholarly communication and evaluation. Within this framework, it can be seen in two complementary ways. First, it is a tool for evaluating research outputs, ensuring that they are scrutinised for rigour, novelty, originality, and ethical soundness. Second, peer review itself must be recognised as a subject of quality assessment. Processes vary widely in fairness, transparency, timeliness, and constructiveness, and new models such as open peer review, post-publication commentary, and community-based reviewing are emerging to strengthen accountability and inclusiveness.

Over the past decade, numerous international initiatives have challenged the dominance of metrics and advanced Responsible Research Assessment (RRA). The San Francisco Declaration on Research Assessment (DORA) (2012) argues against using journal-based metrics to evaluate individuals. The Leiden Manifesto (2015) sets out ten principles for the responsible use of metrics, emphasising context, transparency, and diversity. The Coalition for Advancing Research Assessment (CoARA) (2022) brings institutions together to implement reforms in practice, encouraging narrative CVs, recognition of broader contributions, and reduced dependence on journal prestige. More recently, the Barcelona Declaration on Open Research Information (2024) has underlined the importance of open, transparent, and auditable data sources to ensure accountability in assessment. Collectively, these initiatives point towards qualitative, contextualised, and plural approaches to evaluation, recognising a wide range of contributions including datasets, software, community service, mentoring, and engagement.

The rise of Open Access publishing has been a milestone in widening access and visibility. Yet, it has not resolved systemic inequalities, as high article processing charges can exclude some researchers and institutions. Nor has it dismantled the commercial dominance of a handful of large publishers. To move beyond access alone, the broader agenda of Open Science must be embraced, incorporating open data, open methodologies, open peer review, and the FAIR principles. These practices reinforce transparency, reproducibility, and inclusivity, and align research more closely with societal needs.

Ultimately, research assessment must evolve alongside research itself. The assessment of quality should reflect the diversity of scholarly outputs, value contributions across the full spectrum of knowledge creation, and ensure fairness, particularly for early-career researchers and those in underrepresented regions or disciplines. It must also strengthen trust in science by embedding integrity, accountability, and openness at every stage.

By moving beyond proxies and embracing a multidimensional view, research communities can build responsible research assessment systems that are more equitable, sustainable, and aligned with the true purposes of scholarship: advancing knowledge, nurturing people and communities, supporting research cultures, and contributing positively to society.

keywords

Responsible Research Assessment (RRA); Research quality; Metric indicators; Peer review; Open science; Scholarly communication

references

- Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., & Rafols, I. (2015). The Leiden Manifesto for research metrics. Nature, 520(7548), 429–431. https://doi.org/10.1038/520429a

- CoARA. (2022). Agreement on Reforming Research Assessment. Coalition for Advancing Research Assessment. https://coara.eu

- Barcelona Declaration on Open Research Information. (2024). Barcelona Declaration. https://barcelona-declaration.org

- UNESCO. (2021). UNESCO Recommendation on Open Science. UNESCO. https://unesdoc.unesco.org/ark:/48223/pf0000379949

- Chan, T. T., Pulverer, B., Rooryck, J., & CoARA Working Group on Recognizing and Rewarding Peer Review. (2025). Recognizing and Rewarding Peer Review of Scholarly Articles, Books, and Funding Proposals: Recommendations by the CoARA Working Group on Recognizing and Rewarding Peer Review. Zenodo. https://doi.org/10.5281/zenodo.15968446

- Allen, L., Barbour, V., Cobey, K., Faulkes, Z., Hazlett, H., Lawrence, R., Lima, G., Massah, F., & Schmidt, R. (2025). A Practical Guide to Implementing Responsible Research Assessment at Research Performing Organizations. Declaration on Research Assessment (DORA). https://doi.org/10.5281/zenodo.15000683

- DORA San Francisco Declaration on Research Assessment, & Fonds National de la Recherche. (2021, September 7). Balanced, broad, responsible: A practical guide for research evaluators. DORA. https://doi.org/10.5281/zenodo.15267022

- Consortium of the DIAMAS project. (2025). The Diamond OA Standard (DOAS). Zenodo. https://doi.org/10.5281/zenodo.15227981